Published by: Dikshya

Published date: 23 Jul 2023

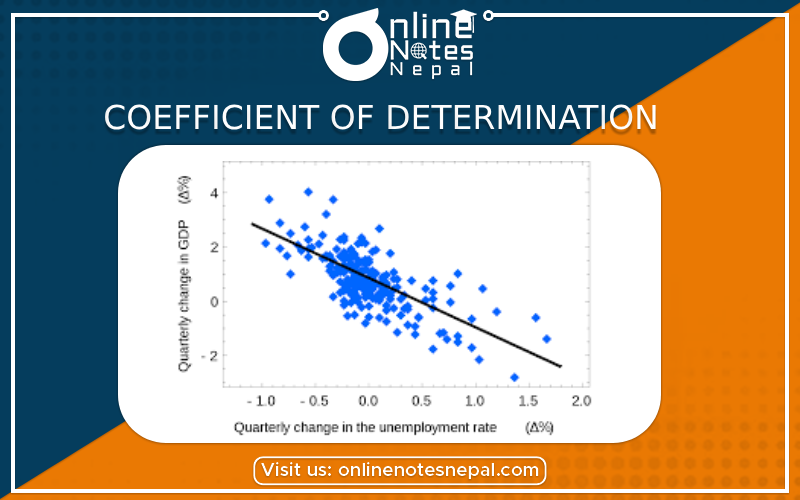

Coefficient of Determination

The coefficient of determination, denoted as R-squared or R², is a statistical metric used to assess the goodness of fit of a regression model. It quantifies the proportion of the variance in the dependent variable that can be explained by the independent variables in the model. In simpler terms, it measures how well the regression model fits the observed data points.

Calculation:

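For a linear regression fitted by least squares with an intercept, R-squared equals the explained variance (the variance of the predicted values) divided by the total variance (the variance of the actual values). In that setting it ranges from 0 to 1, where:

0 means the model explains none of the variance in the dependent variable.

1 means the model explains all of the variance in the dependent variable.

Outside that setting, for example when a model is scored on new data, the formula below can return a negative value, which means the model predicts worse than simply using the mean of the actual values.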

The formula for R-squared is:

R² = 1 - (SS_residual / SS_total)

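Where:

SS_residual is the residual sum of squares: the sum of the squared differences between the actual values and the values predicted by the model.

SS_total is the total sum of squares: the sum of the squared differences between the actual values and their mean.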
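To make the formula concrete, here is a minimal sketch in plain Python. The function name `r_squared` and the sample numbers are made up for illustration; they do not come from any particular library or dataset.

```python
def r_squared(actual, predicted):
    """Return R² = 1 - SS_residual / SS_total for paired observations."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    mean_actual = sum(actual) / len(actual)
    # Sum of squared differences between actual and predicted values.
    ss_residual = sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    # Sum of squared differences between actual values and their mean.
    ss_total = sum((y - mean_actual) ** 2 for y in actual)
    if ss_total == 0:
        raise ValueError("R² is undefined when all actual values are identical")
    return 1 - ss_residual / ss_total


# Hypothetical observations and model predictions (illustrative only).
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 6.5, 9.5]

score = r_squared(actual, predicted)
print(score)  # SS_residual = 1, SS_total = 20, so R² is about 0.95
```

Predictions that match the actual values exactly give an R² of 1, and always predicting the mean of the actual values gives 0.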

Interpretation: R-squared is a crucial metric for evaluating the quality of a regression model. Higher values indicate a closer fit of the model to the data, because a larger proportion of the variance in the dependent variable is explained by the independent variables. For example, an R-squared of 0.80 means the model accounts for 80% of that variance.

However, R-squared should be interpreted with caution, because a high value does not necessarily imply a good model. A model can have a high R-squared value while still having biased or unreliable coefficients. R-squared should therefore be combined with other model evaluation techniques such as residual analysis, cross-validation, and significance tests for the model coefficients.

Limitations: R-squared has its limitations, and it may not always be the most appropriate metric for model evaluation, especially in complex regression scenarios. Some of its limitations include:

Overfitting: A high R-squared value doesn't guarantee that the model will perform well on new, unseen data. It could be overfitting the training data, capturing noise and irrelevant patterns.
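One way to see this is to compare R² on the data used for fitting with R² on held-out data. The sketch below assumes NumPy is available and uses synthetic data (a straight line plus noise) invented for illustration; the polynomial degrees, noise level, and seed are arbitrary choices. The exact numbers depend on the random draw, so none are quoted here.

```python
import numpy as np


def r_squared(actual, predicted):
    """R² = 1 - SS_residual / SS_total."""
    ss_residual = np.sum((actual - predicted) ** 2)
    ss_total = np.sum((actual - np.mean(actual)) ** 2)
    return 1 - ss_residual / ss_total


rng = np.random.default_rng(0)

# Synthetic training and held-out data from the same noisy linear process.
x_train = np.linspace(0.0, 1.0, 12)
y_train = 2 * x_train + rng.normal(scale=0.3, size=x_train.size)
x_test = np.linspace(0.04, 0.96, 12)
y_test = 2 * x_test + rng.normal(scale=0.3, size=x_test.size)

scores = {}
for degree in (1, 6):
    # Fit a polynomial of the given degree to the training data only.
    coefficients = np.polyfit(x_train, y_train, degree)
    scores[degree] = {
        "train": r_squared(y_train, np.polyval(coefficients, x_train)),
        "test": r_squared(y_test, np.polyval(coefficients, x_test)),
    }
    print(
        f"degree {degree}: train R² = {scores[degree]['train']:.3f}, "
        f"test R² = {scores[degree]['test']:.3f}"
    )
```

The more flexible polynomial always scores at least as well on the training data, because it contains the straight line as a special case. Its held-out score is typically lower than its training score, although that is not guaranteed for every random draw.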

Number of Predictors: In ordinary least squares, R-squared never decreases when a predictor is added, even if the additional predictor is not truly relevant to the model. This can lead to misleading interpretations, especially in multiple regression.
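The effect of extra predictors can be checked with a small experiment. This sketch assumes NumPy is available; the data are synthetic, and the helper name `fit_r_squared`, the sample size, and the seed are illustrative choices. It fits ordinary least squares with an intercept, then keeps appending columns of pure random noise as additional predictors.

```python
import numpy as np


def fit_r_squared(features, target):
    """Fit least squares with an intercept and return R² on the same data."""
    design = np.column_stack([np.ones(len(target)), features])
    coefficients, *_ = np.linalg.lstsq(design, target, rcond=None)
    predicted = design @ coefficients
    ss_residual = np.sum((target - predicted) ** 2)
    ss_total = np.sum((target - target.mean()) ** 2)
    return 1 - ss_residual / ss_total


rng = np.random.default_rng(42)
n = 30

# One genuinely relevant predictor plus noise in the target.
x = rng.normal(size=n)
target = 1.5 * x + rng.normal(size=n)

features = x.reshape(-1, 1)
r2_values = [fit_r_squared(features, target)]

# Append five predictors that are unrelated to the target by construction.
for _ in range(5):
    noise_column = rng.normal(size=(n, 1))
    features = np.hstack([features, noise_column])
    r2_values.append(fit_r_squared(features, target))

for count, value in enumerate(r2_values, start=1):
    print(f"{count} predictor(s): R² = {value:.4f}")
```

Each printed value is at least as large as the one before it, even though the added columns carry no information about the target.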

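Non-linear Relationships: When the relationship between the dependent and independent variables is non-linear, the R-squared of a linear model can be low even though the variables are strongly related, and a fairly high value can still hide a systematically wrong functional form.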
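A small constructed example shows how far off a linear model's R² can be. In this sketch the data follow an exact quadratic, y = x², on points symmetric around zero; the helper names `fit_line` and `r_squared` are illustrative, not from a library.

```python
def fit_line(x, y):
    """Return (slope, intercept) of the least-squares line through the points."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(actual, predicted):
    """R² = 1 - SS_residual / SS_total."""
    mean_actual = sum(actual) / len(actual)
    ss_residual = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_total = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_residual / ss_total


# Constructed data: y depends on x exactly, but not linearly.
x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [xi ** 2 for xi in x]

slope, intercept = fit_line(x, y)
linear_r2 = r_squared(y, [slope * xi + intercept for xi in x])
quadratic_r2 = r_squared(y, [xi ** 2 for xi in x])

print(linear_r2)     # 0.0: the best straight line is flat at the mean of y
print(quadratic_r2)  # 1.0: a model with the right functional form fits exactly
```

The straight line explains none of the variance even though y is completely determined by x, so a low R² here reflects the wrong model form rather than a weak relationship.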

Outliers: R-squared is sensitive to the influence of outliers, and a few extreme data points can significantly impact its value.
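The sensitivity to outliers can be seen by changing a single point. The sketch below uses made-up data that lie exactly on a line, then replaces the last value with an extreme one; the helper name `line_fit_r_squared` is illustrative. In this example the outlier lowers R², but depending on where an extreme point sits it can also raise it.

```python
def line_fit_r_squared(x, y):
    """Fit a least-squares line to (x, y) and return its R² on the same data."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_residual = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_total = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_residual / ss_total


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y_clean = [2.0, 4.0, 6.0, 8.0, 10.0]     # exactly y = 2x
y_outlier = [2.0, 4.0, 6.0, 8.0, 30.0]   # same data with one extreme value

r2_clean = line_fit_r_squared(x, y_clean)
r2_outlier = line_fit_r_squared(x, y_outlier)

print(r2_clean)    # 1.0
print(r2_outlier)  # about 0.69 (exactly 9/13)
```

A single changed observation out of five moves R² from a perfect 1.0 to roughly 0.69.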

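Nested Models: When comparing nested models fitted by least squares, the more complex model always has an R-squared at least as high as the simpler one, so a higher value alone does not mean that the more complex model is better. Model comparisons should account for degrees of freedom, for example through adjusted R-squared or an F-test, and consider other evaluation metrics.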

In conclusion, while R-squared provides valuable information about the goodness of fit of a regression model, it should be complemented with other evaluation techniques to make a comprehensive assessment of the model's performance.