Published by: BhumiRaj Timalsina

Published date: 21 Jan 2022

The history of computers is often described in terms of generations. Each generation is distinguished by a key technological development that fundamentally changed the way computers operate, resulting in increasingly smaller, cheaper, more powerful, more efficient and more reliable devices. This division of computers according to development period, memory, processing speed, efficiency, storage and so on is called computer generation. There are five computer generations:

First generation computers used vacuum tubes for circuitry and magnetic drums for memory. They were very large and expensive to operate. Because they consumed a great deal of electricity, they generated a lot of heat, which often caused the system to malfunction. Examples: ENIAC, UNIVAC, MARK-1.

The transistor, invented in 1947 but not used extensively at first, replaced the vacuum tube in second generation computers. The transistor was far superior to the vacuum tube, making computers smaller, faster, cheaper, more energy-efficient and more reliable than the first generation computers. Examples: IBM 1401, UNIVAC-II, IBM 1620.

The development of the Integrated Circuit (IC) was the major turning point of the third generation computers. Transistors were made smaller and placed on silicon chips, called semiconductors, which drastically increased the speed and efficiency of computers. This arrangement was called the integrated circuit. Examples: IBM 360, PDP-8, etc.

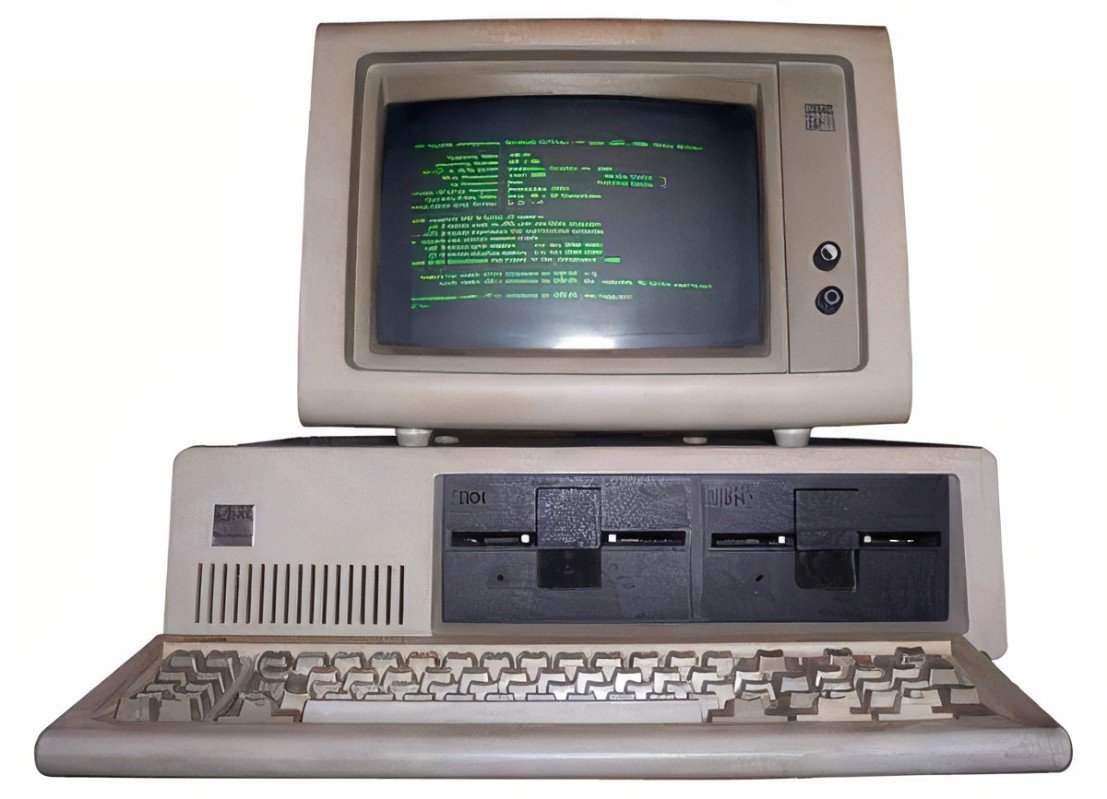

The development of the microprocessor gave rise to the fourth generation of computers. A microprocessor has thousands of integrated circuits built onto a single silicon chip. The Intel 4004 chip, developed in 1971, is regarded as the first commercially available microprocessor.

The fifth generation computers are based on Artificial Intelligence (AI) and are still in development. Since the 1990s, computers that support Voice Recognition Systems (VRS) have been developed.

Biotechnology is expected to be used in fifth generation computers. A computer with AI will be able to understand natural language, think and make decisions.

In the simplest terms, AI, which stands for artificial intelligence, refers to systems or machines that mimic human intelligence to perform tasks and can iteratively improve themselves based on the information they collect. AI manifests in a number of forms.

AI is much more about the process and the capability for superpowered thinking and data analysis than it is about any particular format or function. Although AI brings up images of high-functioning, human-like robots taking over the world, AI isn’t intended to replace humans. It’s intended to significantly enhance human capabilities and contributions. That makes it a very valuable business asset.